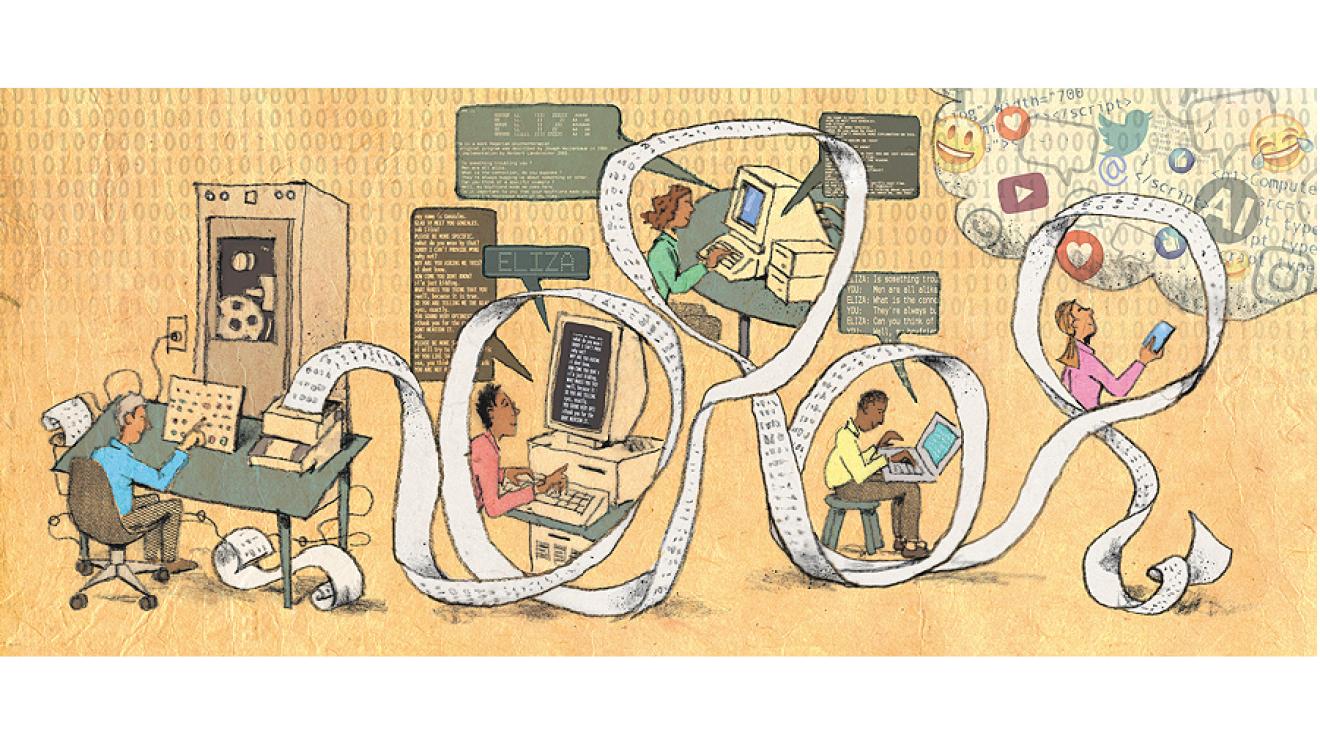

Click-click. Click-clack-click. Clack-click. In 1966, late at night and all alone, I sat down to take control of the PDP-4 computer in the Center for Cognitive Studies on the twelfth floor of William James Hall. Eighteen toggle switches on the console were grouped in threes to represent the octal digits 0–7 because the PDP-4 was a base-8 machine. So I set the switches by flipping them up and down, all down to represent 0, down-down-up to represent 001 or 1, 010 to represent 2, and so on. Flipping up sounded “click,” a little different from the “clack” of flipping down. The machine produced sounds and vibrations and rippling patterns of flashing lights—and sometimes the smell of burning electronics.

I was lucky. My first computer was a robustly physical thing, and my responsibility, at least to start, was limited to learning how the thing worked. Other students used other computers to solve real problems, for example, understanding how water flows around a ship’s hull or modeling the American economy. Those computers were less tangible and more oracular—you communicated with them by passing a question through a window in the form of a deck of punched cards, then coming back later to get an answer as a stack of paper with numbers printed on it. The machine answered the question you asked, which was often not the question you meant to ask. A misplaced comma might turn a meaningful question into gibberish, and, as cybernetician Norbert Wiener, Ph.D. 1913, put it, “The agencies of magic are literal-minded.” Users of batch-processing systems learned, like the Arabian Nights fisherman who released the genie from the jar, to be very careful what they asked for.

By contrast, spending time with an interactive standalone computer like the PDP-4 was like being the engineer in charge of a locomotive: the machine had a personality because it had a body you could feel and listen to. You could tell whether it was running smoothly by the way it sounded. One-inch punched paper tape was the PDP-4’s input medium. The tape was fan-folded, and the unread and already-read parts stood vertically in bins on opposite sides of the sensor lights. There was a gentle rhythmic sound as the segments unfolded from one bin and refolded in the other: sswish, sswash, sswish, sswash. Unless you didn’t set the tape in the take-up bin properly at the beginning; in that case, the rhythmic sound dissolved into an angry crunch and the tape became an ugly mess of loops and squiggles. In those pre-miniaturization days, the ordinary operation of the central processor generated so much radiation that you would put a transistor radio on the console and tune it in between AM stations. From the other side of the room, the tone of the static indicated whether the machine had crashed or not.

These computers had their own magic, but with control of the whole machine, I quickly learned not to fear them. That first night, I laboriously set the toggle switches for the machine language instructions to compute 2+2, strings of 18 zeros and ones in a coded pattern the machine was built to obey. I stored the instructions in successive locations of the computer’s memory using the spring-loaded “deposit” switch, held my breath, and pressed start. Nothing happened. I went back and double-checked my work; it all seemed right. Then it dawned on me that something had happened—the answer, 4, was right where it belonged—but everything had just happened so quickly that I couldn’t see it. Having the machine to myself, I could “single-step” it using another switch and see that it was doing what I intended.

Those two lessons about working with computers have stood the test of time. Be careful what you ask them for. And it can be hard to tell what they are doing.

Before miniaturization made them all but disappear, computers were experienced as physical things. The 0s and 1s being pushed around inside them might represent imaginary worlds: computers were weavers of “pure thought-stuff,” as Fred Brooks, Ph.D. ’56, put it. But their magic had its limits because they didn’t work very well. Any illusion of spiritual embodiment was shattered when you had to clear up a jammed paper tape. If you were on a first-name basis with the mechanic who oiled the gears and adjusted the set screws, you were unlikely to attribute transcendent qualities to the machine even on the days when it worked perfectly.

But people were beginning to converse with computers without seeing them, and it turned out that even the flimsiest screen—between Dorothy the user and Oz the computer—seduced people into regarding the machine as human, or even wizardly.

ELIZA was the original chatbot, created by MIT’s Joseph Weizenbaum in the mid-1960s. Named after the reprogrammed flower girl of George Bernard Shaw’s Pygmalion (later the basis for the musical My Fair Lady), ELIZA crudely mimicked a psychiatrist who knew only how to twist the patient’s statements into new questions and nothing of the meaning of the words it was rearranging. Clumsy as it was, people would converse with ELIZA for hours—even knowing full well they were “talking” through a teletype to a computer. Weizenbaum was shocked when his own secretary asked him to leave the room, as though she wished to confide privately in a new, ever attentive, never judgmental friend. A Jewish refugee who had escaped Nazi Germany at age 13, Weizenbaum struggled to square the dual realities he had witnessed: that humans could treat other humans as subhuman and electronic machines as human. Weizenbaum spent the rest of his career, including a sabbatical he spent at Harvard writing Computer Power and Human Reason, trying to exorcise the monster he felt he had created—an apostate from the pioneering artificial intelligence fervor of his MIT colleagues.

Which brings us to the current flowering of artificial intelligence, after decades of false starts and broken promises. To be sure, many human-computer interactions remain clumsy and frustrating. But smart computer systems are permeating our daily lives. They exist invisibly in the “cloud,” the ethereal euphemism masking their enormous energy demands. They have literally gotten under the skin of those of us with implanted medical devices. They affect and often improve life in countless ways. And unlike the unreliable mechanical contraptions of yore, today’s computers—uninteresting though they may be to look at if you can find them at all—mostly don’t break down, so we have fewer reasons to remember their physicality. Does it matter that the line between humans and the machines we have created has so blurred?

Of course it does. We have known for a long time that we would eventually lose the calculation game to our creations; it has happened. We are likely to lose Turing’s “Imitation Game” too, in which a computer program, communicating with a human via typed text, tries to fool the user into confusing it with a human at another keyboard. (ChatGPT and its ilk are disturbingly convincing conversationalists already.)

Our challenge, in the presence of ubiquitous, invisible, superior intelligent agents, will be to make sure that we, and our heirs and successors, remember what makes us human.

As if that challenge were not enough, another computer-based technology is making it harder to distinguish humans from machines: that is, the screens through which we spend our waking hours conversing with computers and humans alike. Our cell phones are a single portal for banking and love letters, for shopping and flirting and bullying. Just as it is so much easier now to retrieve a library book—click-click, no riding the bus or missing the closing hour—so it is easier than ever to say something mean or cruel, clever or suggestive. Click-click, no awkwardness—you don’t have to look the other person in the eye. The shatterproof glass shields us from our correspondent’s emotions—a human Elizabeth gets no more empathy than ELIZA demanded. Children are especially vulnerable to the inhuman seduction of communicating with others while insulated from their reactions.

Computers can make perfect decisions if they have all the facts and know how to assess the tradeoffs between competing objectives. But in reality, the facts are never fully known; judgments always require evaluation of uncertainties. And it takes a whole human life, and a well-lived life at that, to acquire the values needed to make judgments about our own lives and those of other people. Any particular failure of automated decision-making can be patched, of course. Air Canada’s AI chatbot recently provided a customer incorrect information about the cancellation policy for special bereavement-fare tickets. The airline was shamed into providing a refund after first trying to attribute to the chatbot a mind of its own, “responsible for its own actions.” Given the adverse publicity, that chatbot has probably been reprogrammed not to make the same mistake again. But the attempted anthropomorphism was a profit-driven dodge. The airline built the thing that offered the refund guarantee; the chatbot did not, and could not, know what it means to die, or to lose a loved one, or how good people treat others in that state of emotional trauma—or what a good person is.

All computers can do is pretend to be human. They can be, in the language of the late philosopher Daniel Dennett ’63, counterfeit humans.

But aren’t computers learning to be counterfeit humans by training on lots of data from observations of actual human behavior? And doesn’t that mean that in the long run, computers will make decisions just as people do, only better, because they will have been trained on all recorded human experience? These questions are not trivial, but their premise is mistaken in at least two ways.

The first error is suggesting that computers can be digitally trained to be superior versions of human intellects. And the second is inferring that human judgment will not be needed once computers get smart enough.

Learning first. How do we become the people we are? Not by training a blank slate with discrete experience data. Our brains start off prewired to a significant if poorly understood degree. And then we learn from the full range of human experience in all its serendipitous contingency. We learn from the feel of an embrace and taste of ice cream, from battle wounds and wedding ceremonies and athletic defeats, from bee stings and dog licks, from watching sunsets and riding roller coasters and reading Keats aloud and listening to Mozart alone. Trying to train a computer about the meaning of love or grief is like trying to tell a stranger about rock and roll.

And not just from all these life experiences of our own but from the experiences of all our cultural ancestors. Our teachers—all those from whom we have learned, and those departed souls who taught our teachers—have shaped all those experiences into a structure of life, a system of values and ideals, a way in which we see and interpret the world.

No two of us see the world exactly the same way. Within a culture there are commonalities, but divisions and disputes both within and among groups are fodder for ethnic and religious enmities and civil and international wars.

We will never rid ourselves of the burden of deciding on values and then sorting out our differences. Just as an airline can’t escape responsibility for the advice its chatbot gives, no AI system can be divorced from the judgments of the humans who created it.

It is easy to train a computer to mimic an evil human—that is already happening in sectors where it is profitable. But how could computers be trained to value civilization itself, with so little agreement among humans about what that entails? Some of us hope for ascendency of Enlightenment values—for example, “to advance learning and perpetuate it to posterity” as the founders said of creating Harvard College on the new continent. But what would be the training data set for a superintelligence that would decide what exactly that means—what of civilization to preserve, and what to abandon or vanquish? And which humans could be trusted to frame the query?

Computer scientists, economists, and statisticians have perfected the art of optimal decision-making under defined constraints. But understanding what it means to be human, valuing joy as well as utility, grappling with the moral significance of competing values—these are the domain of the humanities, the arts, philosophy, the challenging, heart-wrenching, soul-searching, exhilarating, character-building, sleepless-night process of a well-rounded liberal education.

Today’s AI-giddy techno-optimists and techno-pessimists might heed Henri Poincaré’s caution: “The question is not, ‘What is the answer?’ The question is, ‘What is the question?’” The humanities have pressed the great questions on every new generation. That is how they differ from the sciences, a literary scholar once explained to me; humanists don’t solve problems, they cherish and nurture them, preparing us and our children and grandchildren to confront them as people will for as long as the human condition persists. Our hope for the beneficial development of AI rests on the survival of humanistic learning.

“There is intelligence in their hearts, and there is speech in them and strength, and from the immortal gods they have learned how to do things.” So wrote Homer some 2,800 years ago of golden robots forged by the divine blacksmith Hephaestus. But these demigod automata knew who was boss. “These stirred nimbly in support of their master,” the Iliad continues. Only hubristic humans could think that their counterfeits might completely substitute for human companionship, wisdom, curiosity, and judgment.