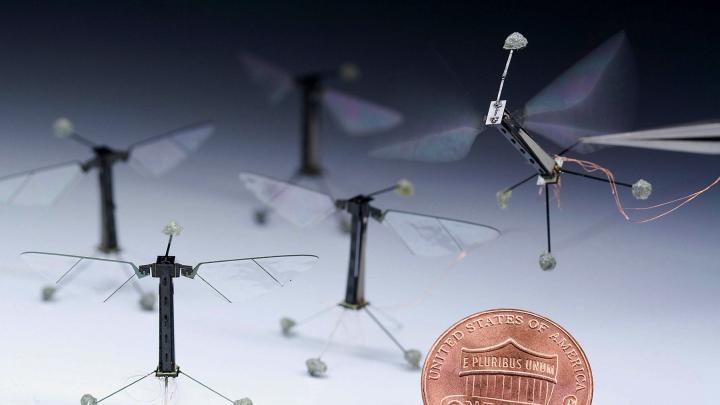

One day nearly a decade ago, Gu-Yeon Wei was walking the corridors of Harvard’s newly established School of Engineering and Applied Sciences when he passed the office of Robert Wood. Wood had just made a splash in the engineering world by successfully demonstrating the first robotic fly. Wei, an electrical engineer, designs computer chips; lost in thought, he’d been contemplating the approaching deadline for a five-year, $10-million “Expeditions in Computing” grant from the National Science Foundation. These grants, for “ambitious, fundamental research agendas that promise to define the future of computing and information technology,” are among the largest investments the NSF makes in computer science and engineering, and they encourage combining the creative talents of many investigators to achieve transformative, disruptive innovations.

“I saw the fly,” Wei recalls. “I had just read an article about the colony-collapse disorder afflicting honeybees.” He knocked on Wood’s door and made a pitch on the spot: Let’s build robotic bees. “You have the body,” he told Wood. “David [Brooks, a computer-systems architect] and I could build the brain. Let’s get a couple of other people to work with us. They can do colony.”

Wildly ambitious, the project united 12 principal investigators (PIs), including Wood, the Charles River professor of engineering and applied sciences, and Wei, Gordon McKay professor of electrical engineering and computer science, who faced 12 impossible engineering tasks. “You’ve got to actually build the robots using unknown aerodynamics and unknown manufacturing techniques, subject to impossible weight and power limits, and with non-existing power sources,” explains Justin Werfel, who worked on the colony aspect of the project. “And then the bees have to follow unknown algorithms to coordinate as a group.”

Ostensibly, the goal of “RoboBees: A Convergence of Body, Brain, and Colony” was to create a swarm of flying microbots that could pollinate flowers. But this envisioned application was more a motivating framework than a principal aim. The potential applications are varied and compelling, ranging from search and rescue, to surveillance, to environmental monitoring.

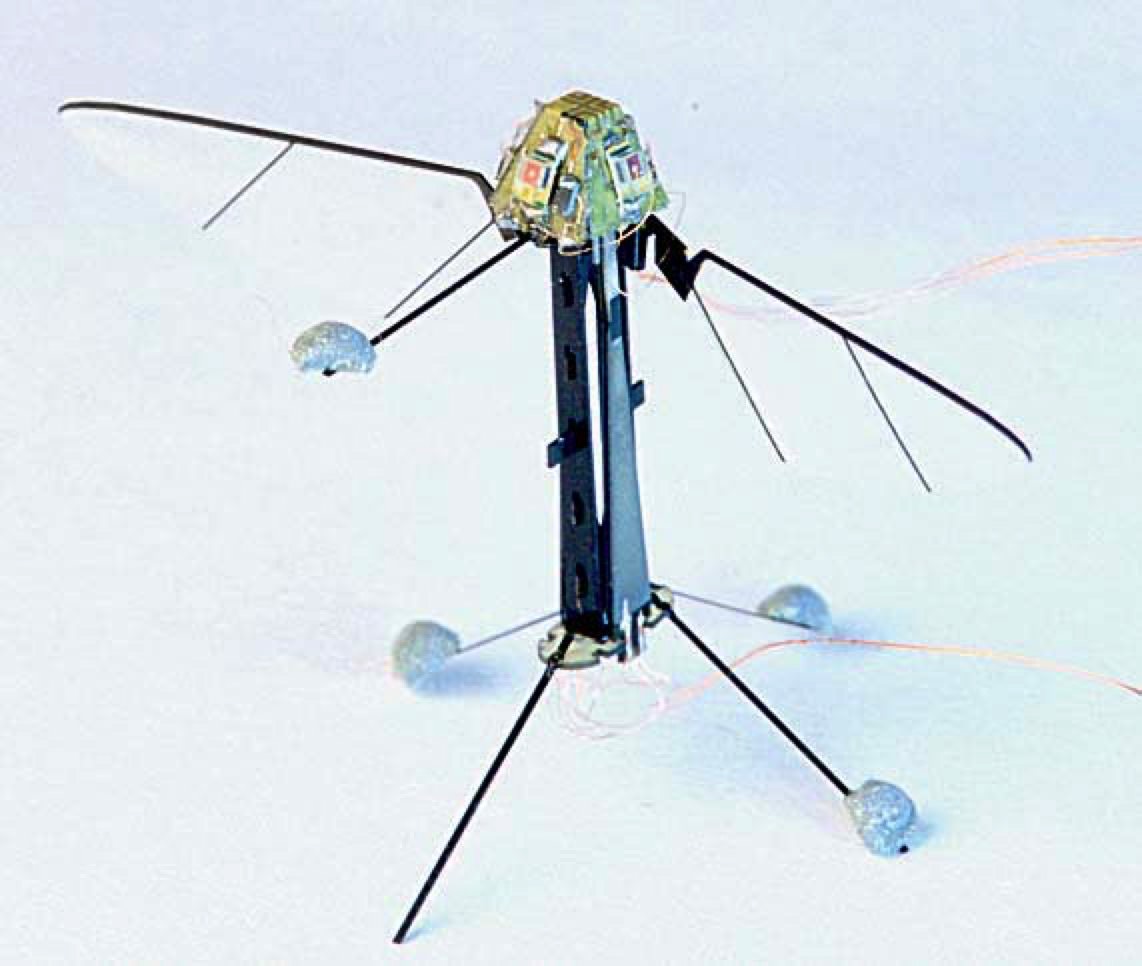

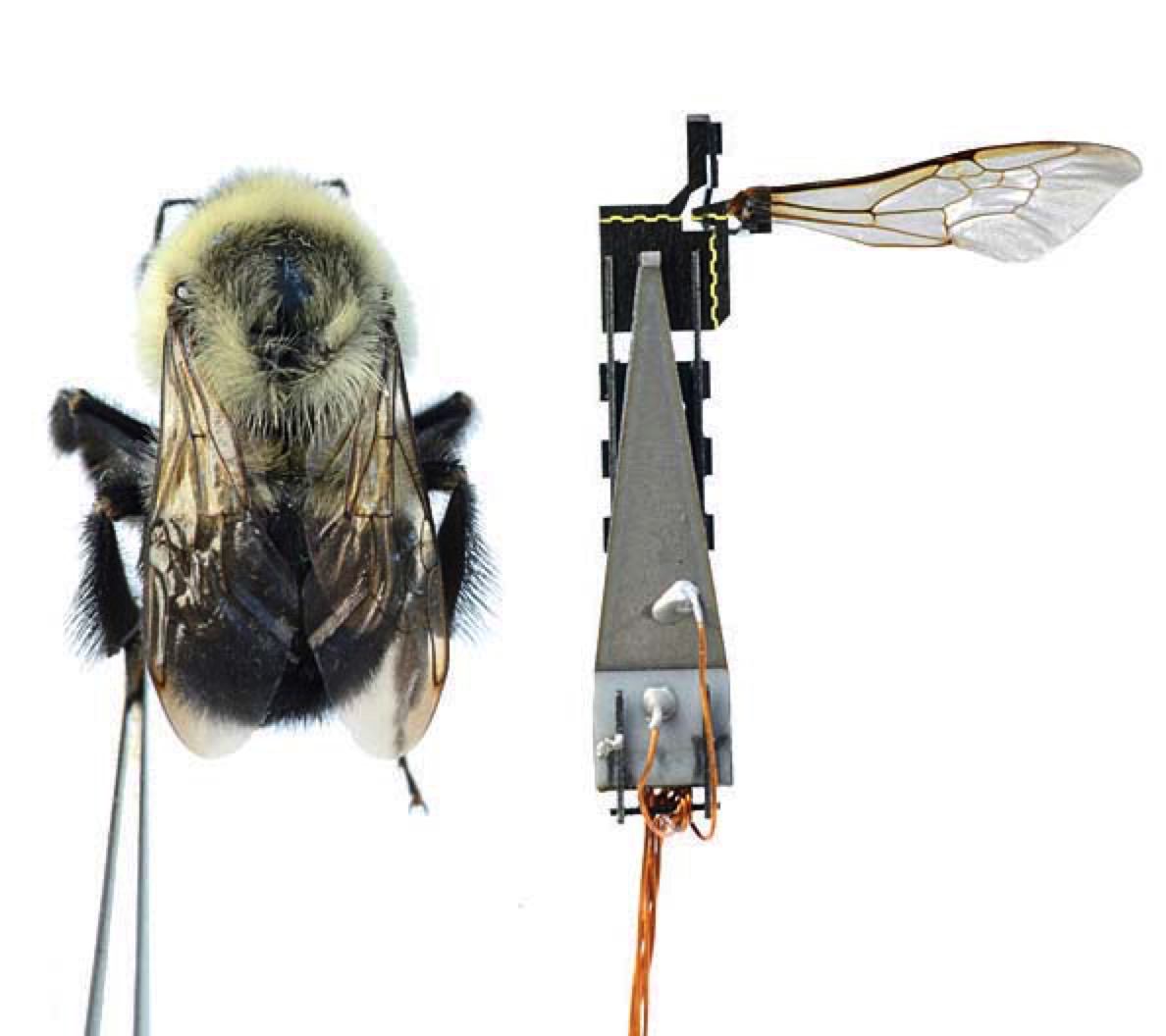

In fact, the primary purpose was to advance the limits of robotic engineering on multiple fronts, under extreme constraints in terms of parameters such as size, weight, power, and control. Extraordinary advances in applied science and engineering would be necessary in order to create “insects” as small as bees that could navigate, communicate, and function as part of a collective. Wood’s fly (see “Tinker, Tailor, Robot, Fly,” January-February 2008, page 8), marvelous as it was at the time, remained tethered to a wire that provided power and control. To achieve autonomous function, the researchers would have to solve problems ranging from the mechanics of flight at that scale, to the intricacies of mass manufacture, to miniaturization of onboard sensors, to the understanding and programming of group behavior—many requiring substantial advances in basic science and engineering.

Wei’s serendipitous proposition, inspired by nature, has since led into realms of engineering unimaginable even a few years ago. By pushing engineering requirements to their limits, the project has advanced technologies that are now filtering into medicine, electrical engineering, computing, and consumer electronics.

Impressing Archimedes

From the outset, the researchers divided the RoboBees work into the three parts indicated by the proposal’s title: body, brain, and colony. The challenge began with the daunting physical constraints imposed by insect-scale robotic flight. How should the wings be shaped? How fast should they move? Where would the power come from? How could these tiny but complex robots be manufactured at scale?

Because flight is energy-intensive, weight was a limiting factor that guided the design’s development. Conventional motors were excluded immediately: they are heavy, explains Wood, and at insect-size scales, forces of friction on gears and bearings consume an unacceptable proportion of the energy they use. He focused instead on the development of highly efficient actuators made of piezoelectric materials (solid substances typically used to convert mechanical energy into electricity) that could, merely by the application of an electric field, make the wings oscillate in a motion akin to treading water.

There is piezoelectric material in virtually every backyard grill in America. Turn on the gas, press the button, and “poof,” with a muffled explosion, the grill ignites, because the built-in crystalline piezoelectric materials emit an electric charge in the form of a spark when their structure is perturbed by the physical force of pressing the button. The wings of a RoboBee beat by reversing that principle: applying an electrical field to a piezoelectric material causes the crystalline structure to deform, minutely. Although this motion is nowhere near enough to flap a wing, Wood has overcome that limitation by building a series of connected, origami-inspired folds that link to the hinges of the wings. Each fold acts like a tiny lever, amplifying the initial minuscule piezoelectric deformation by building on the amplification of the one before until the wings “beat.” Archimedes would have been impressed.

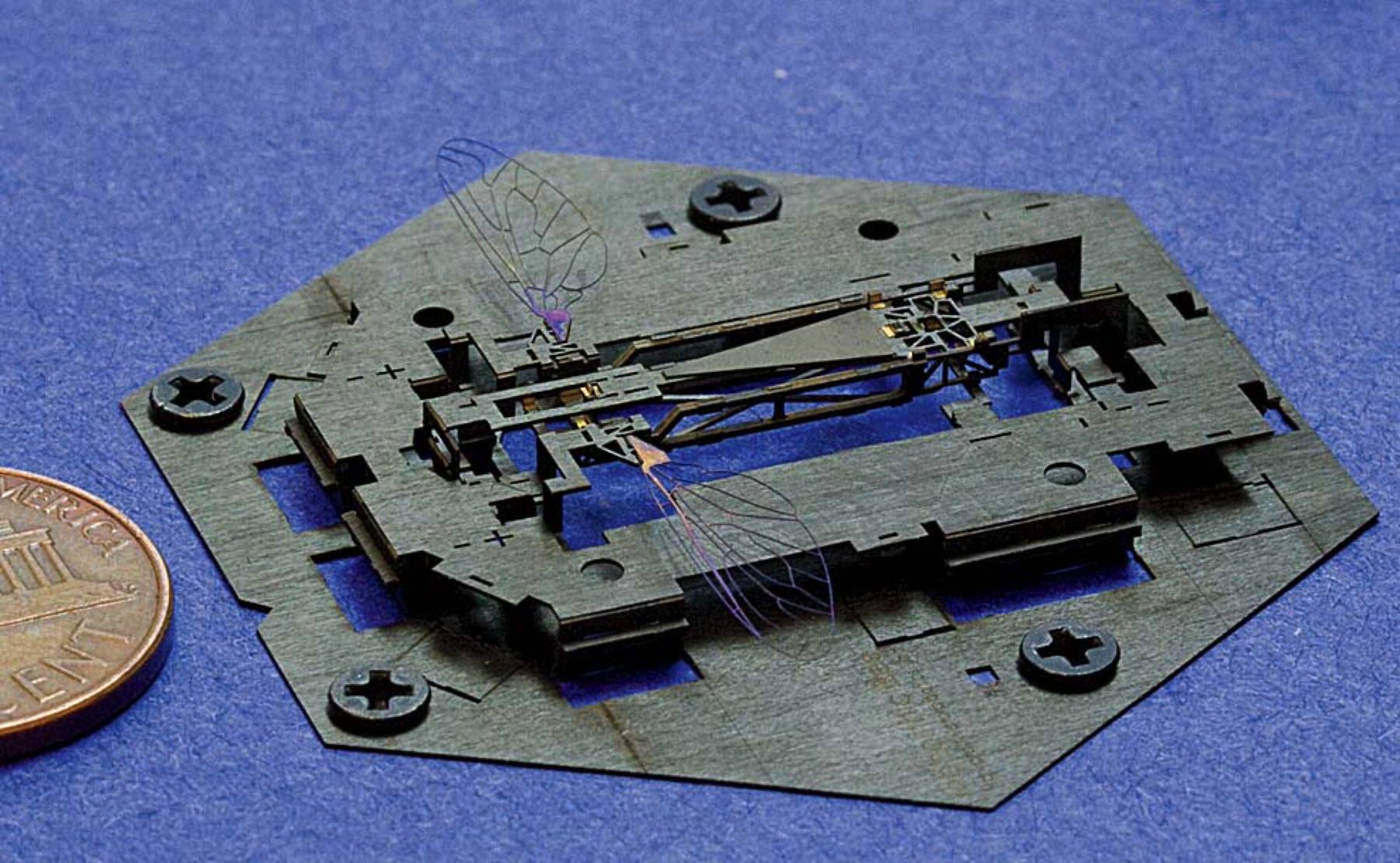

The ingenuity of this solution becomes even more evident in the manufacturing process—each bee is laser machined from alternating layers of soft, flexible polymers and hard carbon fiber. Wherever the rigid carbon layers are further cut away to expose a pliant polymer layer, the laminated construction of the insect leads to a series of flexure joints that allows the bee to take three-dimensional shape as it is lifted from the substrate—like a page in a children’s pop-up book. Manufacturing complex machines with tiny, integrated piezoelectric motors in this way is expected to lead to a new generation of minimally invasive microsurgical tools, says Wood, who has been exploring collaborations with surgeons in one of Harvard’s teaching hospitals and with partners in industry.

Courtesy of the Harvard School of Engineering and Applied Sciences

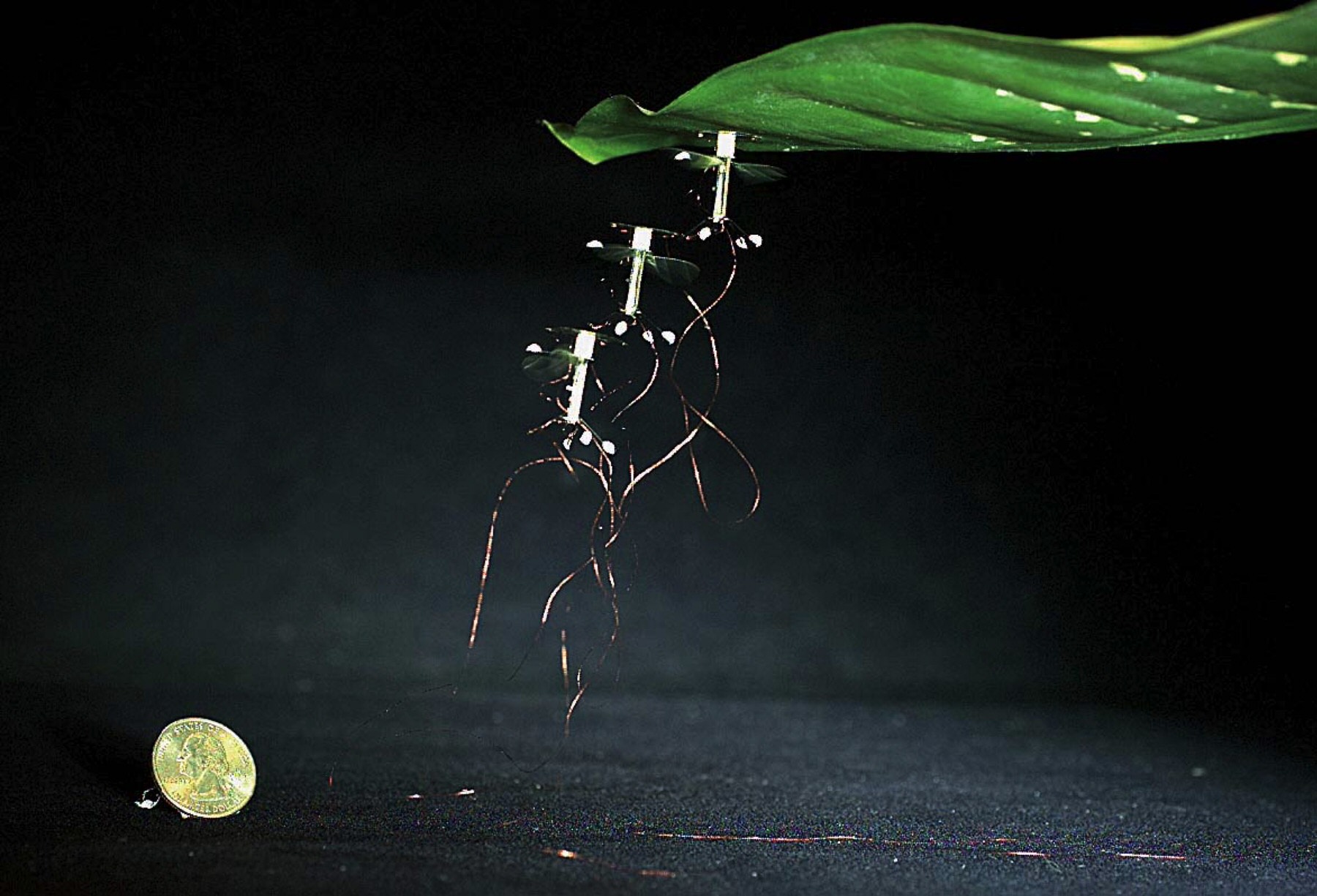

Not all the challenges inherent to building the bee have proven as tractable. Shrinking an energy source, even one that will let the bee fly for just a minute or two, is exceedingly difficult. Given the voltage requirements, wing shape, flapping frequency, battery weight, and so on, Wood calls powering the RoboBee “a complex, multi-dimensional optimization problem.” He and Wyss professor of biologically inspired engineering Jennifer Lewis (see “Harvard Portrait,” November-December 2013, page 62, and “Building Toward a Kidney,” January-February, page 37) are on the cusp of developing a 3-D printed lithium-ion battery small and light enough for onboard integration. Simultaneously, he is exploring the use of ultra-thin solar panels for recharging batteries (to keep the bees aloft using solar power alone would require 30 percent more sunlight than reaches the Earth’s surface now, or significantly better photovoltaics). Wood’s research group has also developed a technology for use in conjunction with an energy-conserving strategy: perching. An electro-adhesive material that clings to surfaces when a small current is applied allows the RoboBee to perch virtually anywhere, from a wall to the underside of a leaf; it could then recharge before moving to a new location.

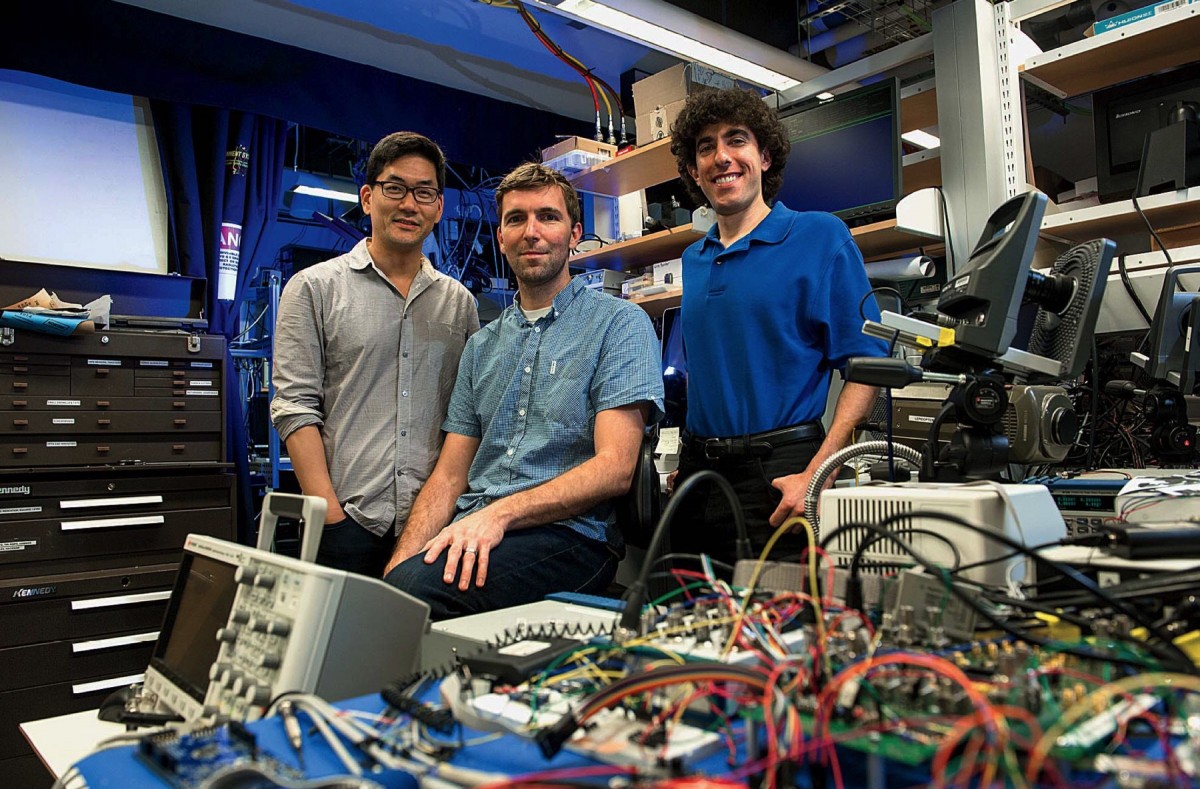

Because the RoboBee project involved many distinct engineering challenges, solutions could not be worked out seriatim: parallel processing was mandatory. The other teams therefore took their cues from Wood’s initial fly design, says David Brooks, who worked with Wei to develop the RoboBee’s brain.

Building a Brain

When you drive along a highway at 60 miles per hour, the adjacent roadside flashes by in a blur of green leaves, brush, cement. When you turn to rubberneck, individual objects spring into focus, but only for a second. How can the driver of a car stay centered in one lane of a highway without having to look left and right constantly to maintain position? The answer is optical flow, and bees use the same principle to navigate.

“Think of yourself as walking down a corridor,” explains Brooks, the Haley Family professor of computer science. Peripheral vision indicates the sides of the hallway. “Optical flow doesn’t try to recognize the hallway itself. It just tries to recognize the motion that you see as you pass through it. If you’re closer to the right side, you’ll feel like things on that side are moving faster. If you’re closer to the left, you’ll feel that side’s moving faster. And if you’re right in the middle, you’ll feel that it’s equal.” In experiments, researchers have coaxed honeybees to fly down a chute with a repeating black and white pattern on the walls. As they fly, the bees sense the speed of the alternating pattern, and try to balance the flow by staying in the middle, because they don’t want to bump into a side wall. When researchers change the pattern frequency in order to simulate a change in speed, they observe that the bees drift to the left or the right to maintain balance. “That’s how we know these bees are actually using optical flow to guide themselves,” Brooks explains. In the RoboBee, he says, “We want to use the same optical-flow strategies.”
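The centering behavior Brooks describes can be sketched in a few lines. This is a minimal illustration, not the RoboBee's flight code: the function names, the `gain` parameter, and the simple speed-over-distance model of apparent motion are all assumptions made for the example.

```python
def apparent_flow(forward_speed: float, wall_distance: float) -> float:
    """Apparent angular speed of a wall passing directly to the side.

    Assumed model: a nearer wall appears to move faster (speed / distance).
    """
    return forward_speed / wall_distance


def steering_command(left_flow: float, right_flow: float, gain: float = 1.0) -> float:
    """Return a lateral steering command that balances the two flows.

    Positive means "steer right", negative means "steer left".
    """
    total = left_flow + right_flow
    if total == 0:
        return 0.0  # nothing appears to move, so there is nothing to balance
    # Faster apparent motion on one side means that wall is closer,
    # so steer away from it, toward the slower side.
    return gain * (left_flow - right_flow) / total


# A flier 0.25 m from the left wall of a 1 m wide corridor, moving at 1 m/s.
left = apparent_flow(1.0, 0.25)
right = apparent_flow(1.0, 0.75)
off_center = steering_command(left, right)   # positive: move right, toward the middle
centered = steering_command(apparent_flow(1.0, 0.5), apparent_flow(1.0, 0.5))
```

Note that nothing here recognizes a wall as a wall. The controller only compares two motion magnitudes, which is why speeding up the pattern on one side, as in the honeybee experiments, pushes the flier toward the other.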

The approach has several advantages. One of the RoboBee’s onboard sensors is a tiny camera. “If we didn’t use optical flow,” says Brooks, “we probably would use some other kind of optical sensing based on trying to take a picture of a wall, detecting that it’s a wall, and trying to avoid it.” But the camera would be taking millions of photographs for analysis continuously, which would be computationally expensive. “Flow is an attractive algorithm because it is much simpler to calculate,” he explains. “You don’t need a lot of pixels” to detect the simple contrast differences on which optical flow relies. That means the cameras can be quite simple, small, lightweight, and very low power, and the bee’s brain doesn’t have to perform high-resolution computation on the pixel data.

Optical-flow data will be combined with information from an inertial measurement unit (IMU) that combines an accelerometer, gyroscope, and magnetometer, devices that provide data about acceleration, rotation, and the direction of magnetic north. These units, now common in cellphones, have become small enough, Wei says, that they were bought off-the-shelf for use in the RoboBee. In combination, these sensors will allow the bee to achieve stable flight, at least in theory.

Like real flies, RoboBees are inherently unstable. That’s one reason flies are so difficult to swat: they can change direction in an instant, taking advantage of their natural tendency to pitch and yaw in unexpected ways. In the RoboBee, such unpredictability is less desirable, so it will fall to a custom microprocessor, a computer system on a chip (SOC) nearly 10 times as energy-efficient as a general-purpose microprocessor, to keep the bee aloft. The work on this “brain” SOC, developed specifically by Brooks and Wei for the RoboBee’s extreme weight and power constraints, began in 2010 and culminated in 2015 in the production of a chip built with features just 40 nanometers (billionths of a meter) across. Unusually, all the components needed to run the microprocessor as a freestanding system, which are normally developed separately, were integrated, allowing the team to minimize the system’s overall weight.

Still, this integrated system’s power management presented its own unique problems. The chip runs on just under one volt (anything more could destroy the sensitive electronics), but the robot’s piezoelectric wing actuators require as much as 300 volts. A series of students advised by Wei, Wood, and others figured out how to draw power from a 3.7-volt battery, ramp it up to 300 volts for one application, and step it down to just 0.9 volts for the system’s brain, all using as few of the standard tools of microelectronics (such as resistors, inductors, and capacitors) as possible.
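The scale of that conversion problem is easier to see as ratios. The short calculation below uses only the three voltages quoted above; the constant and function names are made up for the illustration, and it says nothing about how the converters themselves are built.

```python
BATTERY_VOLTS = 3.7     # onboard battery
ACTUATOR_VOLTS = 300.0  # upper bound quoted for the piezoelectric wing actuators
CHIP_VOLTS = 0.9        # supply for the "brain" chip


def conversion_ratio(output_volts: float, input_volts: float) -> float:
    """Ratio by which a converter must scale its input voltage."""
    return output_volts / input_volts


step_up = conversion_ratio(ACTUATOR_VOLTS, BATTERY_VOLTS)    # roughly 81x
step_down = conversion_ratio(CHIP_VOLTS, BATTERY_VOLTS)      # roughly 0.24x
rail_spread = conversion_ratio(ACTUATOR_VOLTS, CHIP_VOLTS)   # roughly 333x between the two rails

print(f"step up: {step_up:.1f}x, step down: {step_down:.2f}x, spread: {rail_spread:.0f}x")
```

A single small battery therefore has to feed two supplies that differ by a factor of more than 300.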

In fact, the system they developed for ramping up the voltage for piezoelectric actuators, like those that drive the RoboBee’s wings, represents another example of the project’s technological spin-off effects. General Electric uses a similar technology to drive tiny fan-like bellows for cooling electronics, but those are built from discrete components that make them much larger than the RoboBee’s integrated version. Wei believes the newer technology could find numerous commercial applications, perhaps at GE, and elsewhere.

But the ability to learn is probably the most stunning advance Wei and Brooks have incorporated into their latest chip design. Built with features just 16 nanometers across, smaller than a flu virus, the chip integrates a deep neural network (DNN), a design that mimics the architecture of the brain’s many connections among neurons, and therefore has the ability to do many things at once in real time. DNN-powered computers are far better than traditional ones at tasks such as image recognition, and have the capacity to learn by example or by doing. (Last year, a program running on a DNN that had been trained to play Go, a game many times more complex than chess, became arguably the best player of the game in history after watching humans play and then playing simulations against itself.)

Photograph by Nick Gravish/Harvard School of Engineering and Applied Sciences

The implications are profound. DNNs can enable speech recognition, potentially enabling voice control of RoboBees. And because DNNs can learn by doing, they may enable the RoboBee to teach itself to fly.

“Ever since we started looking into deep learning, I’ve had this dream,” says Wei. “I’d say to David [Brooks], ‘It’s so hard to set all the parameters just right in order to be able to get this bee to fly in a stable way.’ From what I hear,” he continues, “Rob’s students run mini experiments, where the wings flap, and the bee turns over this way, and flips over that way, and they tune this variable and that, and then over time—and it really takes an instinct to be able to do it just right for that particular bee—they can stabilize it. And I thought, ‘You know, we ought to be able to teach these bees how to fly. There must be some way. Why can’t we use neural networks to implement the flight-control algorithms?’” Instead of human-tuned parameters, the bees would attempt flight in a motion-capture lab (a box equipped with high-speed cameras) and over time, using the deep neural network, learn how to fly based on this feedback, in the same way that training wheels help teach a child how to ride a bike. (The NSF recently provided funding to pursue this idea as part of a larger project Wei unofficially calls “RoboBees 2.0.”)

There will be many commercial applications for the technology, he explains. The DNNs that Google or Apple now run in the cloud to enable speech recognition services such as Siri, for example, require “massive” computing capacity. What if such a system could run on a tiny chip in home coffeemakers or televisions? With this kind of locally processed speech recognition, the privacy issues that now plague implementations of the Internet of Things—connected household appliances—would vanish. No one would have access to personal data revealing what time people wake up or how much time they spend watching TV—or have to worry about electronic eavesdropping on a conversation by the water cooler.

But the applications of the RoboBee itself, whether for search and rescue (seeking body heat or CO2 signatures in collapsed buildings), or as mobile sensor networks that could move and collect data before returning to a central location, all rely on the idea that no RoboBee will operate alone.

“Doomed to Succeed”

The bee is not designed as a failure-proof, single machine. Instead, the vision is to deploy hundreds or thousands of these tiny robots—a swarm—that will complete a task as a collective entity. It is a powerful idea, because it means that success does not depend on the fate of a single RoboBee. If 20 crash, flying as part of a hive of a thousand whose mission is to locate a single object, the search will not be compromised. But programming and coordinating the actions of many robots is a challenge unto itself.

Sheep brains floating in jars of slightly cloudy preservative line the shelves in the office of Justin Werfel. He runs Harvard’s Designing Emergence Laboratory, which studies and designs the simple rules that govern the behavior of individuals within a group, but that lead to complex collective behaviors. “We study these systems from both a scientific and an engineering standpoint,” Werfel explains. “Can we predict the collective outcome from the rules the individual agents follow? Can we design low-level behaviors that guarantee a particular high-level result?”

Models of robot colonies that perform construction projects adorn his desk, and five multicolored juggling balls rest by his keyboard (he can juggle all five—at least for a moment—and ride a unicycle). Werfel, a senior research scientist at the Wyss Institute for Biologically Inspired Engineering, writes algorithms that control how individual robots behave, and studies the sometimes surprising outcomes of their collective behavior. Herds, swarms, schools, and colonies are the biological inspiration for his work. The sheep brains are a playful nod to that; almost all his research is done on a computer. His lab works closely with the self-organizing systems research group headed by Kavli professor of computer science Radhika Nagpal. Together, they study how complex properties emerge from the decentralized actions of simple agents. But none of their colony work, led by Nagpal, used actual RoboBees, he explains, because the bees didn’t yet exist.

Instead, they developed numerous simulations and relied on complex systems theory, he explains. At times, they drew upon proxies, from tiny helicopters to an antlike swarm of terrestrial kilobots whose coordinated actions successfully moved a cutout of a picnic basket from one place to another.

As part of the project, Werfel studied patterns of communication and subsequent action among honeybees. Although much has been made of the way honeybees communicate the location of pollen resources via a “waggle dance,” Werfel and his student collaborator wanted to know how many bees, after receiving this information, actually went to the location described. Prior research had shown that many bees ignore the instructions and return instead to locations where they have successfully collected pollen before. In other words, the dance was effectively received simply as a message that pollen was available. From a systems perspective, Werfel and his student were able to show that this apparent deviance makes sense: if every bee went to the same location, the pollen there would soon be depleted, and the hive as a whole would suffer. In essence, they teased out an insight—about the advantages of avoiding absolutes in rule-making—that could optimize the writing of software programs governing collective behavior.
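The depletion argument can be made concrete with a toy model. This is a deliberately crude sketch written for this passage, not the analysis Werfel and his student carried out: the function, its parameters, and the capacities in the example are all hypothetical.

```python
def hive_harvest(
    n_bees: int,
    follow_fraction: float,
    advertised_capacity: int,
    familiar_capacity: int,
) -> int:
    """Units of pollen brought home in one foraging round (toy model).

    Each bee carries one unit. A location can supply only a fixed number
    of units, so bees arriving after it is depleted return empty.
    """
    followers = round(n_bees * follow_fraction)  # bees that obey the dance
    independents = n_bees - followers            # bees that revisit old sites
    from_advertised = min(followers, advertised_capacity)
    from_familiar = min(independents, familiar_capacity)
    return from_advertised + from_familiar


# 100 foragers; the advertised site and the familiar sites each hold 60 units.
everyone_follows = hive_harvest(100, 1.0, 60, 60)  # advertised site runs dry
half_follow = hive_harvest(100, 0.5, 60, 60)       # load is spread, no one returns empty
no_one_follows = hive_harvest(100, 0.0, 60, 60)    # familiar sites run dry instead
```

In this toy setting the hive does best when only some bees obey the dance, which is the flavor of the "avoid absolutes" insight, though the real result rests on far richer data and modeling.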

Werfel’s interest in the subject began two decades ago, when as a physics undergraduate he began reading about mound-building termites, insects he finds extraordinary: “You’ve got millions of independent insects, and none of them knows what the others are doing. They don’t know what the mound looks like. They don’t know what they’re trying to build. They don’t know where they are. They’re not getting assigned instructions from the queen. And they’re blind. And somehow, all of them following whatever rules they’re presumably following, all of them doing whatever they’re doing, you wind up getting these huge-scale, complicated structures.”

But what are those instructions? Werfel has been probing this question by studying natural populations of termites, and by running simulations, experiments, and mathematical analyses with robotic termites (the TERMES system) that can build structures based on simple rules of his devising. In the first instance, he is studying two virtually identical species of termites that live near each other in Namibia. Even experts with microscopes and field guides in hand, he explains, have difficulty telling them apart based solely on their morphology. One species, Macrotermes michaelseni, builds “striking spires,” while the other, Macrotermes natalensis, he says, constructs low, “lumpy nothings.” By studying their behavior under controlled conditions, Werfel hopes to tease out the differences in the rules that govern their behavior.

In complementary work, he started with the very simplest artificial systems he could devise, involving two dimensions. His robots were instructed simply to build a plane, a flat surface, with no holes in it. In an iterative process of trial and error, he began to understand how instructions could lead to surprising patterns in group behavior. Even the three-dimensional structures built by TERMES robots followed the simplest of rules. To avoid getting stuck in a situation where they can’t keep building, the robots are instructed, before adding a brick, to check where material is already present around them: for every site that a robot could have just come from, the stack of bricks there must either be one brick higher than the stack the robot is on now, or be the same height, if the blueprint calls for no further bricks to be added there. For every site the robot might visit next, the stack has to be the same height as the one the robot is leaving.
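The brick-placement check reads naturally as a small predicate. The sketch below follows the rule as paraphrased in this article and should not be taken as the TERMES implementation: the data structures, the names, and the extra check that the current stack is still unfinished are assumptions added for illustration.

```python
from typing import Dict, Iterable, Tuple

Site = Tuple[int, int]  # grid coordinates of a stack of bricks


def may_place_brick(
    here: Site,
    came_from: Iterable[Site],
    may_go_to: Iterable[Site],
    height: Dict[Site, int],
    blueprint: Dict[Site, int],
) -> bool:
    """Decide whether a robot standing on `here` may add a brick there."""
    h = height[here]
    if h >= blueprint[here]:
        return False  # assumption: never build above the blueprint

    # Every site the robot could have just come from must be one brick
    # higher, or level with this one and already complete.
    for site in came_from:
        one_higher = height[site] == h + 1
        level_and_done = height[site] == h and height[site] >= blueprint[site]
        if not (one_higher or level_and_done):
            return False

    # Every site the robot might visit next must be level with this one.
    return all(height[site] == h for site in may_go_to)


# Three sites in a row; the robot walks a -> b -> c and every stack should reach 2.
a, b, c = (0, 0), (1, 0), (2, 0)
blueprint = {a: 2, b: 2, c: 2}
ok = may_place_brick(b, [a], [c], {a: 1, b: 0, c: 0}, blueprint)
too_early = may_place_brick(b, [a], [c], {a: 0, b: 0, c: 0}, blueprint)
blocked_ahead = may_place_brick(b, [a], [c], {a: 1, b: 0, c: 1}, blueprint)
```

Each robot consults only the stacks immediately around it, yet, per the article, checks of this kind are what keep the group from building itself into a dead end.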

“Why that should work is not obvious,” Werfel adds. But the advantage of working on collective construction, as he calls it, is that the resulting structure—the thing that gets built—is the physical manifestation of the emergent behavior, there for everyone to see in all its glory, or failure. “There’s a saying in robotics,” he says: “ ‘Simulations are doomed to succeed.’ When you try it with real hardware, you always learn things you don’t expect.”

The study of emergent behavior, and of the programming of individual agents, whatever the group goal might be, seems likely to find application in human realms sooner than expected. It doesn’t take a big cognitive leap to perceive the analogies between tiny mobile robots and the autonomous vehicles, already being road-tested, that operate by a set of rules little different from those Werfel runs in his simulations.

Developing rules for the collective behavior of RoboBees depends on the mission they are asked to accomplish, which in practical terms remains undefined. But applications have never been the driving force or the measure of the project’s achievements. “We can build the RoboBee at insect-scale,” says Wood. “We understand the dynamics, and we know how to navigate in this complex design space. We understand actuation, we have good solutions to the power electronics, and we understand the fluid mechanics of the wings. We have developed sensors that can stabilize flight, so the bee can hover and land.”

He is optimistic that the bee can be trained for full flight using deep neural networks. The biggest remaining challenge is integrating all the components into a single flying bee. That seems like a simple final step, but Wei, for one, questions whether it will ever happen. “The research has been done,” he says, “and the bee is a solved problem in some sense.” What remains—“Tinkering, and fighting engineering problems—which is not as interesting to academics”—he thinks may be better left to industry.

It is the next hard problem that engages the passion and creativity of these engineers. Werfel is already thinking about how to build swarms of robots that could live in the human body, clearing arterial plaque, for example, by using DNA as a building block for tiny intravascular machines. Wood, while committed to seeing the discoveries spawned by the RoboBee project applied to medicine and manufacturing, is engaged in a complementary new realm, too: how to build and control soft robots, with no hard moving parts, that can safely interact with biological tissues and organisms, even in extreme high-pressure environments like those found in the deep oceans. Brooks and Wei aspire to build tiny, low-power computer chips that could use speech and vision to control not only a bee, but anything one can imagine. It might be called “RoboBees 2.0,” says Wei. Or anything, really. The attendant limits in a name are there, it seems, only to inspire fresh innovation.